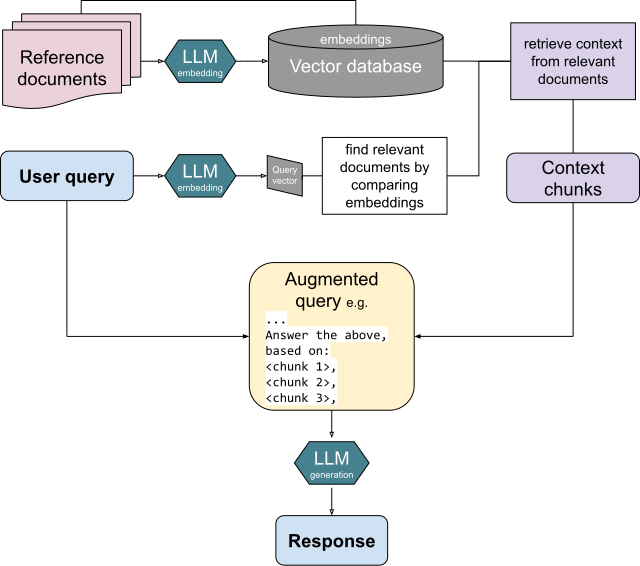

A high-level overview of the Retrieval-Augmented Generation process: combining retrieval and generation to produce informed responses.

Retrieval-Augmented Generation (RAG) is gaining significant traction in machine learning and artificial intelligence. If you’re familiar with advanced AI models, you may have heard of RAG and its pairing of a retrieval component with a generative one to improve output quality. If you plan to implement RAG, it’s essential to understand how it works in depth and to know the pitfalls of a poorly understood implementation.

What is RAG?

Retrieval-Augmented Generation, or RAG, is an architecture that supplements a language model with information retrieved at query time. Unlike traditional generative models, which produce responses based purely on what they learned during training, RAG draws on external knowledge to generate more precise and context-aware outputs. RAG blends two distinct components:

- Retriever: A mechanism that searches an external knowledge base or dataset to find the most relevant information, enhancing context.

- Generator: A language model, such as GPT, that uses both the retrieved context and its own learned information to produce more accurate and comprehensive responses.

This combined approach addresses a key limitation of standard language models: the fixed knowledge cutoff. By pulling in external information at query time, RAG can improve the accuracy and reliability of the generated content, especially in domains that require up-to-date or specialized knowledge.
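The retriever and generator roles described above can be sketched in a few lines. This is a minimal illustration, not a production design: the token-overlap scoring, the `DOCS` list, and the `generate` stub are all assumptions made for the example, and a real system would call an actual language model and query a proper index.

```python
import re

# Toy knowledge base; a real system would query an indexed document store.
DOCS = [
    "RAG pairs a retriever with a generator.",
    "BM25 is a lexical ranking function.",
    "The Eiffel Tower is in Paris.",
]

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, docs, k=2):
    """Return up to k docs that share the most tokens with the query."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(doc)), doc) for doc in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents with no overlap at all.
    return [doc for score, doc in scored[:k] if score > 0]

def build_prompt(query, passages):
    """Place the retrieved passages ahead of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )

def generate(prompt):
    """Hypothetical stand-in for a language-model call."""
    return f"[model response to a {len(prompt)}-character prompt]"

def answer(query, docs=DOCS):
    """Retrieve first, then generate from the augmented prompt."""
    passages = retrieve(query, docs)
    return generate(build_prompt(query, passages))
```

Calling `answer("Where is the Eiffel Tower?")` retrieves the Paris passage, places it in the prompt, and hands that prompt to the generator stub. The point of the structure is that the generator only ever sees what the retriever chose to pass along.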

Key Benefits of RAG

- Dynamic Knowledge Updates: RAG reads from an external data store that can be updated at any time without retraining the model, which avoids the problems caused by a fixed training cutoff. This is especially useful when dealing with recent events or niche topics.

- Domain-Specific Information: The retriever can be pointed at a particular dataset, giving the system domain expertise without fine-tuning the model, even if the original language model doesn’t specialize in that domain.

- Improved Response Quality: By combining retrieval with generative capabilities, RAG provides more accurate, coherent, and contextually rich responses, reducing the likelihood of irrelevant or misleading content.
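To make the first benefit concrete, here is a small sketch of a knowledge base that becomes more useful the moment a document is added, with no model retraining involved. The `KnowledgeBase` class, the document ids, and the policy texts are hypothetical; the inverted index is one common way to build such a store, not the only one.

```python
import re
from collections import defaultdict

class KnowledgeBase:
    """In-memory inverted index: token -> ids of documents containing it."""

    def __init__(self):
        self.docs = {}
        self.index = defaultdict(set)

    def add(self, doc_id, text):
        """Index a new document; it becomes searchable immediately.

        Re-adding an existing id is not handled in this sketch.
        """
        self.docs[doc_id] = text
        for token in re.findall(r"[a-z0-9]+", text.lower()):
            self.index[token].add(doc_id)

    def search(self, query, k=3):
        """Rank documents by how many distinct query tokens they contain."""
        hits = defaultdict(int)
        for token in set(re.findall(r"[a-z0-9]+", query.lower())):
            for doc_id in self.index.get(token, ()):
                hits[doc_id] += 1
        ranked = sorted(hits, key=lambda d: (-hits[d], d))
        return [self.docs[d] for d in ranked[:k]]

kb = KnowledgeBase()
kb.add("policy-2023", "Refunds are accepted within 14 days.")
before = kb.search("What is the shipping fee?")   # nothing relevant yet

kb.add("policy-2024", "The shipping fee is 5 dollars per order.")
after = kb.search("What is the shipping fee?")    # new document is found
```

Here `before` is empty and `after` contains the newly added shipping policy, which is the behavior a fixed-cutoff model cannot offer on its own.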

Why Thorough Knowledge is Essential When Implementing RAG

Implementing RAG can bring numerous advantages, but it requires a solid understanding of how the retrieval and generation components interact. RAG is not a plug-and-play model; it requires careful orchestration of both components to achieve the desired results. For the retriever and generator to work in tandem, three things need attention:

- Retriever Quality is Paramount: The quality of RAG’s output depends on the effectiveness of the retrieval component. If the retriever is poorly designed or inadequately tuned, it will feed inaccurate or irrelevant context to the generator. This not only degrades performance but can also produce highly misleading outputs.

- Index Management: The retriever depends on indexed data to find relevant context. Building a quality index requires thoughtful curation of the dataset. If you don’t consider the structure and reliability of the data being indexed, your system may end up pulling erroneous or outdated information.

- Handling Latency: Implementing retrieval adds an extra processing layer, which could increase response times. Without careful optimization, latency issues may make the system impractical for applications requiring real-time interactions.
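Before optimizing latency, it helps to know which stage is responsible for it. The sketch below times the retrieval and generation stages separately. The `fake_retrieve` and `fake_generate` functions and their sleep durations are placeholders invented for the example; in a real pipeline they would be the actual index lookup and model call.

```python
import time

def timed(stage, timings, fn, *args):
    """Run fn(*args) and record its wall-clock duration under `stage`."""
    start = time.perf_counter()
    result = fn(*args)
    timings[stage] = time.perf_counter() - start
    return result

def fake_retrieve(query):
    time.sleep(0.01)  # simulated index lookup (placeholder duration)
    return ["passage about " + query]

def fake_generate(query, passages):
    time.sleep(0.02)  # simulated model call (placeholder duration)
    return f"answer to {query!r} from {len(passages)} passage(s)"

def answer_with_timings(query):
    """Return the reply together with per-stage timings in seconds."""
    timings = {}
    passages = timed("retrieve", timings, fake_retrieve, query)
    reply = timed("generate", timings, fake_generate, query, passages)
    timings["total"] = timings["retrieve"] + timings["generate"]
    return reply, timings

if __name__ == "__main__":
    reply, timings = answer_with_timings("rag")
    for stage, seconds in timings.items():
        print(f"{stage}: {seconds * 1000:.1f} ms")
```

Logging these numbers per request shows whether retrieval is actually the bottleneck or whether the generator dominates, which determines where optimization effort should go.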

Potential Issues with Poor Implementation

A lack of thorough understanding of RAG’s complexities can lead to several critical issues:

- Garbage In, Garbage Out: If the retriever isn’t properly calibrated, it may feed irrelevant or incorrect information into the generative model. The generator cannot reliably compensate for flawed input and will tend to produce inaccurate or irrelevant responses.

- Conflicting Information: Without thorough filtering or quality control, the retrieved content may conflict with the model’s internal knowledge, resulting in confusing or misleading responses. This is particularly concerning in applications like customer support, healthcare, or education, where consistency is crucial.

- Complex Integration: Implementing RAG requires robust infrastructure—ranging from maintaining efficient retrieval databases to integrating a generative model that can handle the inputs appropriately. If the setup is not carefully planned, it may lead to a system that is overly complex, hard to maintain, or prone to frequent breakdowns.

- Scalability Concerns: While retrieval components may work well with smaller datasets, scaling up without efficient indexing, memory management, or optimization techniques can quickly lead to bottlenecks, severely limiting the usefulness of the system.
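One simple guard against the "garbage in" problem is to drop weakly matching passages before they reach the generator, and to abstain when nothing clears the bar. The sketch below uses Jaccard similarity over token sets purely for illustration; the `min_score` value of 0.2 is an arbitrary assumption, and a real system would tune the threshold against its own retriever's scores.

```python
import re

def tokens(text):
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def jaccard(a, b):
    """Token-set similarity in [0, 1]."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0

def filter_passages(query, passages, min_score=0.2):
    """Keep only passages scoring at least min_score, best first."""
    scored = [(jaccard(query, p), p) for p in passages]
    kept = [(s, p) for s, p in scored if s >= min_score]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [p for s, p in kept]

def build_context(query, passages, min_score=0.2):
    """Return context text, or None to signal that the system should abstain."""
    kept = filter_passages(query, passages, min_score)
    return "\n".join(kept) if kept else None

PASSAGES = [
    "To reset your account password, open Settings.",
    "Our office is closed on public holidays.",
]

relevant = build_context("reset my account password", PASSAGES)
nothing = build_context("quarterly revenue forecast", PASSAGES)
```

In this example `relevant` contains only the password passage, while `nothing` is `None`, which the calling code can treat as a cue to say "I don't know" instead of generating from unrelated text.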

Best Practices for Implementing RAG

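- Optimize the Retriever: Choose a retrieval method that fits your requirements. BM25 is a sparse, keyword-based ranking function that is fast and needs no training; Dense Passage Retrieval (DPR) uses learned embeddings and can match passages that share meaning with the query but not its exact wording.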

- Curate Your Knowledge Base: The quality of the information retrieved depends heavily on the knowledge base. Ensure your corpus is clean, relevant, and up-to-date.

- Latency Optimization: Keep an eye on latency issues. Use caching mechanisms and fast retriever models to reduce the overall response time.

- Iterative Testing and Feedback: Implementing RAG should be iterative. You need to test and validate both retriever and generator components continually to ensure they are working seamlessly together.
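As an illustration of the first and last practices, the sketch below implements BM25 scoring in plain Python and measures how often the expected document comes back for a small set of labeled queries. The corpus, the labeled queries, and the `k1`/`b` defaults are assumptions chosen for the example, and the IDF uses the smoothed variant that stays positive. A maintained library would normally replace this hand-written class.

```python
import math
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())

class BM25:
    """Okapi BM25 over a small in-memory corpus."""

    def __init__(self, docs, k1=1.5, b=0.75):
        self.docs = docs
        self.k1, self.b = k1, b
        self.term_counts = [Counter(tokenize(d)) for d in docs]
        self.lengths = [sum(c.values()) for c in self.term_counts]
        self.avg_len = sum(self.lengths) / len(docs)
        # Document frequency: number of docs containing each term.
        self.df = Counter(t for c in self.term_counts for t in c)

    def idf(self, term):
        n = self.df.get(term, 0)
        return math.log((len(self.docs) - n + 0.5) / (n + 0.5) + 1)

    def score(self, query, i):
        counts, length = self.term_counts[i], self.lengths[i]
        # Length normalization: longer-than-average docs are penalized.
        norm = self.k1 * (1 - self.b + self.b * length / self.avg_len)
        total = 0.0
        for term in tokenize(query):
            tf = counts.get(term, 0)
            total += self.idf(term) * tf * (self.k1 + 1) / (tf + norm)
        return total

    def top_k(self, query, k=1):
        """Indices of the k best-scoring docs, skipping zero-score docs."""
        scores = [(self.score(query, i), i) for i in range(len(self.docs))]
        scores.sort(key=lambda pair: pair[0], reverse=True)
        return [i for s, i in scores[:k] if s > 0]

def hit_rate(retriever, labeled_queries, k=1):
    """Fraction of queries whose expected doc index appears in the top k."""
    hits = sum(expected in retriever.top_k(q, k) for q, expected in labeled_queries)
    return hits / len(labeled_queries)

DOCS = [
    "Refunds are accepted within 14 days of purchase.",
    "Standard shipping takes three to five business days.",
    "Support is available by email on weekdays.",
]
LABELED = [
    ("how long does shipping take", 1),
    ("refunds within how many days", 0),
    ("email support hours", 2),
    ("can I return an item", 0),  # no shared keywords with doc 0
]

rate = hit_rate(BM25(DOCS), LABELED, k=1)
```

On this toy data the hit rate is 0.75: the last query shares no keywords with the refund document, so a purely lexical retriever misses it. Tracking a number like this across changes to the corpus or retriever is one way to make the iterative testing concrete, and misses of this kind are the cases where a dense retriever may help.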

Final Thoughts

RAG is an exciting advancement in the AI world, allowing us to overcome the limitations of traditional language models. However, it demands a deep understanding of the interplay between its retrieval and generation components. One cannot afford to skip the fundamental concepts when implementing it—doing so could lead to systems that are inaccurate, unreliable, and hard to maintain.

If you are considering using RAG, make sure to invest the time to understand its internal workings thoroughly. The rewards for proper implementation are immense—more accurate, current, and context-aware responses—but so are the risks if mishandled. RAG isn’t just a “nice to have”; it’s a powerful tool that, if used correctly, can significantly elevate the effectiveness of conversational AI.